Introduction: The BCI Ethics Frontier

As brain-computer interfaces (BCIs) move from lab to market, BCI ethics questions become unavoidable. Who owns your thoughts? What happens when neural data is commodified? And how can we protect autonomy when machines read our thoughts?

This article explores the ethical challenges faced by three major players: ELVIS Technologies (Russia), Neuralink (USA), and Synchron (USA/Australia). Each is pioneering breakthroughs, but with them come responsibilities.

1. BCI Ethics – Informed Consent and User Autonomy

Consent in BCI is uniquely complex. Patients or users must understand:

- What data is being collected

- What the device will control

- Whether their thoughts are stored or transmitted

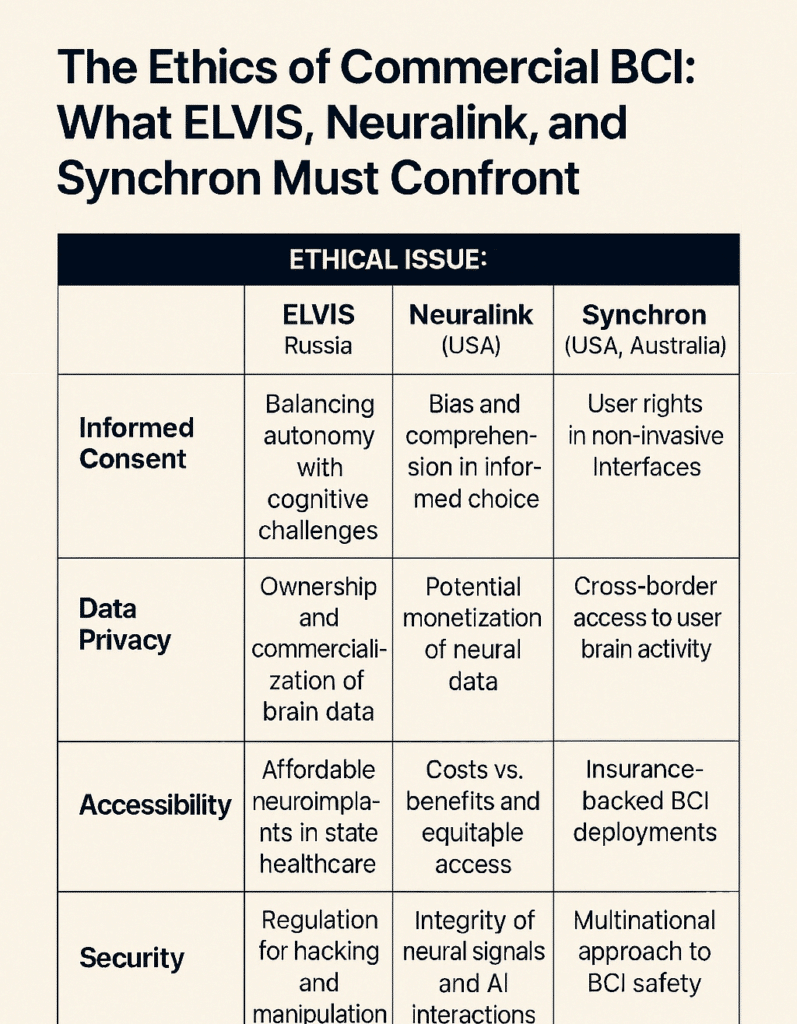

Neuralink uses advanced robotics and claims high levels of user control, but little is publicly known about its consent frameworks. Synchron, working with academic hospitals, follows strict IRB (Institutional Review Board) protocols. ELVIS, operating mostly in Russia, has no published informed consent procedures in international journals.

Insight: Synchron currently demonstrates the most transparent ethical safeguards around user autonomy.

The challenge deepens when BCIs are offered to people with cognitive impairments. Determining their capacity to give meaningful consent requires additional layers of protection, counseling, and potentially third-party advocacy.

2. Neural Data Ownership and Privacy

One of the most important ethical issues is: who owns the data?

- BCIs can capture not just movement signals, but also emotions, impulses, and intentions.

- If stored or transmitted, this data could be accessed by third parties.

Neuralink has not detailed how it will secure user data or whether it will commercialize it. ELVIS is opaque on this front, while Synchron has published protocols that prioritize data anonymization and hospital-level security.

Key Concern: In countries without strong data protection laws, such as Russia and even parts of the U.S., there is a risk of neural surveillance if safeguards are not enforced.

The commodification of neural data also raises long-term questions. Could brain data be bought, sold, or used to influence behavior? The lines between medical records, behavioral data, and marketing insights may blur.

3. Enhancement vs. Therapy: The Line Between Help and Hype

While all three companies currently target medical needs (paralysis, blindness, hearing loss), the potential for cognitive enhancement looms:

- Memory boosting

- Emotion regulation

- Accelerated learning or decision-making

Neuralink openly speaks about long-term goals of merging with AI, which raises philosophical and ethical concerns. ELVIS and Synchron have not promoted enhancement but may face pressure to expand beyond therapeutic use.

Ethical Dilemma: Should BCI companies be allowed to market products that alter cognition in healthy users?

Moreover, enhancement applications could exacerbate inequalities. If only the wealthy can access cognitive upgrades, societal gaps could widen, undermining democratic principles and workforce fairness.

4. Accessibility and Global Equity

Ethics also involves who gets access.

- Will only the rich have access to enhanced cognition?

- Will war-torn or underfunded areas be testbeds for unregulated experimentation?

Synchron, through partnerships with hospitals and public health systems, shows potential for equitable deployment. Neuralink, a private company, may price out most users. ELVIS could become the low-cost alternative for Eurasian markets, but it risks using populations with fewer protections as test groups.

Insight: True BCI ethics must prioritize global equity, not just elite performance.

To achieve meaningful ethical equity, companies may need to offer tiered pricing, public subsidies, or global licensing strategies aimed at affordability. Without such plans, BCI could mirror the digital divide seen in internet access.

5. Dual-Use and Militarization Risks

All BCI technologies have potential military applications:

- Cognitive control of weapons

- Mind-to-mind communication

- Emotion detection in interrogation settings

Neuralink and ELVIS have both been associated with national-level innovation programs, which could be leveraged by defense sectors. Synchron, while less publicized in this area, still operates within geopolitical systems where dual-use is a concern.

Policy Gap: There is currently no global framework limiting the weaponization of BCI technology.

There are growing concerns that the same neural interfaces used for healing could be adapted for surveillance, psychological operations, or combat augmentation. This raises urgent calls for multilateral agreements that restrict BCIs to peaceful applications only.

BCI Ethics Conclusion: Toward an Ethical Framework

As commercial BCIs move forward, ethical leadership is not optional. It must include:

- Transparent data policies

- Certified consent frameworks

- Public oversight of clinical trials

- Global norms around enhancement and dual-use

Synchron currently leads in public transparency and research ethics. Neuralink leads in vision but must clarify data rights. ELVIS must move toward internationally accepted ethical standards to gain legitimacy.

The future of neurotechnology will not be shaped by innovation alone; it will be defined by integrity.

The companies that succeed long-term will be those that align technological breakthroughs with societal trust, public accountability, and a vision for inclusive, ethical deployment. Without a moral framework, the promise of BCI risks turning into peril.